LONDON — Several tech companies are offering new artificial intelligence (AI) solutions to estimate the age of people accessing websites, seizing potentially lucrative opportunities to help platforms comply with the U.K.’s new Online Safety Act and similar legislation advancing in the EU and the U.S.

According to a report by The Telegraph newspaper, Google’s AI age estimation system, which uses facial recognition technology, was quietly approved by the U.K. media regulator Ofcom.

Although Google “has never revealed that it plans to use the technology,” the report notes, “the company has appeared on a registry of providers approved by the Age Check Certification Scheme (ACCS), the UK’s programme for age verification systems.”

According to the conservative newspaper, the tech giant developed its “selfie scanning software” to prepare for a “porn crackdown.”

“It is one of the proposed ways that internet users could verify they are old enough to access adult sites under new online safety laws,” the report explains.

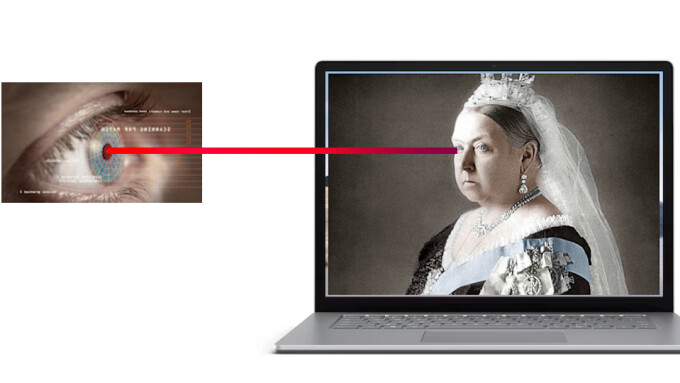

The new technology reportedly uses phone cameras to capture users’ faces and estimate their likely age.

Google claims that the technology is 99.9% reliable in identifying whether a photo depicts someone under the age of 25. Users estimated to be under 25 could be asked to provide additional ID.

“The prospect of Google scanning faces to grant access to sensitive websites would likely raise privacy concerns given the trove of data the company already gathers on web habits,” The Telegraph notes.

As XBIZ reported, earlier this month Ofcom, the government authority charged under the U.K.’s recently enacted Online Safety Act with enforcing online content restrictions, issued its first guidance to adult websites on age verification.

The guidance suggests acceptable age verification methods, including open banking, photo identification matching, facial age estimation using unspecified software, mobile network operator age checks, credit card checks and digital identity wallets.

Online privacy and digital rights groups worldwide have widely expressed serious concerns about the Online Safety Act and about the expanded content censorship powers it grants Ofcom.

Abigail Burke of the digital rights nonprofit Open Rights Group told the Financial Times that the guidelines “create serious risks to everyone’s privacy and security.”

The potential consequences of data being leaked, Burke added, “are catastrophic and could include blackmail, fraud, relationship damage and the outing of people’s sexual preferences in very vulnerable circumstances.”

A Gold Rush for Age Estimation Solution Providers

Other AI solution providers have already entered the burgeoning age estimation marketplace. Meta and OnlyFans use Yoti, whose system, The Telegraph reports, “automatically deletes images once their age has been estimated.”

Last week, unified identity platform Persona and Trusted Vision AI provider Paravision unveiled their new partnership on an AI age estimation and verification solution.

“Based on Paravision’s AI Principles and Persona’s mission to humanize digital identity, this solution is ethically built and trained on a diverse set of data, as well as rigorously audited to detect and mitigate bias,” the companies said in a press release.

Paravision and Persona noted that the need to conduct age verification has now expanded from fraud prevention to include “social networks, gaming, and other online platforms as children spend an increasing amount of time online.”

The companies referred specifically to new legislation introduced globally to “restrict children’s access to harmful or otherwise inappropriate content,” such as the bipartisan Kids Online Safety Act (KOSA) in the U.S. and the U.K.’s Online Safety Act.

Paravision Chief Product Officer Joey Pritikin emphasized that “the need for reliable, responsible age estimation technology has never been more pressing, particularly in light of the growing concerns around children’s online presence as well as leveraging ethical approaches to AI.”

Persona Head of Identity Products Daniel Lee enthused, “It is encouraging to see lawmakers pushing platforms, and therefore their identity solution providers, towards greater innovation and responsibility. The mandate is clear: we must balance the delivery of high-assurance, unbiased solutions with safeguarding end user privacy. We believe our industry-leading solution will help our customers better deliver trusted services, while complying with age verification regulations, fighting fraud, and keeping users safe.”